Nissan’s Tetsuro Ueda: ‘Invisible-to-Visible tech also acts as a catalyst for a heightened level of interaction.’

Tetsuro Ueda is a technology expert at the Nissan Research Center, where Nissan explores cutting-edge technologies. Working with a small but dedicated team, Ueda researches ways to connect technology and humans in an enriching, seamless way. His latest development is set to create new insights into what we see through connected platforms and sensing technologies.

At the CES 2019 trade show, which opened today, Nissan announced Invisible-to-Visible, a revolutionary technology that helps drivers ‘See the Invisible’ by merging the real and virtual worlds to create the ultimate connected-car technology. In a recent interview, Ueda expanded on how he envisions society interacting with Invisible-to-Visible technology.

Can you tell us briefly about Invisible-to-Visible technology?

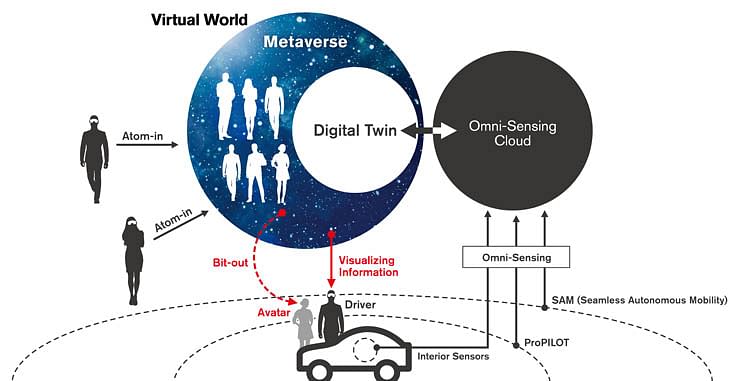

Invisible-to-Visible, or I2V, is our way of increasing awareness and enhancing the driving experience. By merging our world with the virtual world, called the Metaverse, we can create the ultimate connected-car experience. Sensing data, gathered from the space around us as we drive, and interactions with virtual information are brought to life by three-dimensional, augmented visuals in front of us. In essence, we can interact with and see information that would otherwise be invisible to us.

The Invisible-to-Visible (I2V) system is powered by data gathered by car sensors and online information provided by connected devices.

How is this different from similar technologies being developed by others?

Until now, interaction with digital devices has fundamentally amounted to adding voice to information displayed on a flat screen. I2V technology goes beyond this conventional way of providing information. It is true that some companies are researching three-dimensional in-vehicle displays similar to ours. The differentiator is our new approach of presenting data or avatars in mixed reality (MR). This increases the level of interaction and gives virtual elements weight in our reality. Another factor is our goal of presenting information and data in a human-like way.

Can you expand on that? How's this different from the virtual personal assistants (VPAs) on the market today?

While those VPAs use AI to make user assistance functions more efficient, the agent-type avatars that offer services through the Metaverse, which we call Traverse-Agents, are entirely different: they emphasize interaction between people rather than efficiency. VPAs serve as functional assistants; Traverse-Agents go beyond functionality to act as a partner in the mobile vehicle space. The concept makes full use of I2V sensing technologies such as Omni-Sensing, which gathers data from traffic infrastructure, from sensors around the vehicle and from the Metaverse to meet a variety of needs. A Traverse-Agent can be anything from a casual conversation partner to a source of driving guidance, language study, business and personal consulting, or counseling, all in the same shared space as the user.

Some information received through Omni-Sensing includes traffic data. How is this different from devices and apps that provide similar information?

It isn't necessary to access the Metaverse to get traffic information. That can be handled by accessing a traffic-information Cloud and visualizing the data, which doesn't go beyond the scope of existing connected cars that link to the Cloud. I2V takes that data and adds a higher level of detail using data collected by Omni-Sensing technology. For example, we can learn not only that traffic is congested ahead, but also why it is congested, which lane is best to take and which alternative routes would avoid the area altogether. Knowing about the unknown ahead can ease driving stress.

The CES demonstration will show how people from the Metaverse can join as an avatar inside the car. Can they take control of the vehicle?

In autonomous driving conditions, we envision a special operator who can give instructions to automated-driving vehicles, along the lines of the Seamless Autonomous Mobility (SAM) concept we announced at CES 2017. But we're not thinking about transferring control of the driving operations (accelerator, brake and steering). It might be possible to share functions not involved in driving, such as operating the air conditioner or infotainment system, to enhance the sense of presence and the quality of the shared travel experience.

Given the appeal of a driving experience through virtual worlds, do you think actually driving a car still has appeal? Will car ownership decrease?

Cars satisfy people's desire for mobility. A core feature of I2V technology is sharing the experience of mobility, but it also acts as a catalyst for a heightened level of interaction. This technology encourages new interactions through movement that extends beyond the real world into the fast-growing Metaverse and its users. That implies a dramatic rise in the number of users who can interact in more situations. We hope this technology will motivate people to use cars in order to enjoy the I2V user experience.

Furthermore, riding together in a virtual space could never match the experience of reality. Thus, we're not concerned about a decrease in the driving public. A driving experience merged with virtual experiences should instead encourage movement in the real world.

Are there any concerns about people in the car joining the Metaverse while driving?

It isn't possible to dive fully into the Metaverse while driving. This technology uses elements of the Metaverse so that users can converse with avatars as a bit-out presence, that is, a person from the Metaverse projecting their virtual self into the real world inside the car. That is technologically different from a full VR experience. While a full VR experience is possible, we don't think it works as a viable driving experience.

Can this technology work anywhere? Or just in cities with connected infrastructure?

It can be used anywhere with internet access. That said, using it in moving vehicles will have to wait for the introduction of 5G or later wireless technology. Once we have the technology and connection speed to manage it while driving, Invisible-to-Visible will enhance the mobility experience and open the door to infinite worlds.

Data: Nissan.com

By Autocar Professional Bureau

08 Jan 2019
