New sensor models to help autonomous cars 'see' the road ahead more clearly

More accurate modelling of radar, LiDAR and ultrasound sensors in simulation will enable better validation before use on public roads.

UK-based Claytex, the international consultancy specialising in modelling and simulation, is developing a new generation of sensor models to improve the validation of autonomous vehicle (AV) systems. The new models are intended to help prevent tragic accidents such as the recent fatality in Arizona involving an AV and a pedestrian pushing a bicycle. One of the contributory factors in that incident was the failure of the AV's sensors to correctly identify the obstacle ahead.

“The artificial intelligence (AI) in an AV learns by experience, so must be exposed to many thousands of possible scenarios in order to develop the correct responses. It would be unsafe and impractical to achieve this only through physical testing at a proving ground because of the timescales required,” explains Mike Dempsey, managing director, Claytex. “Instead a process of virtual testing in a simulated environment offers the scope to test many more interactions, more quickly and repeatably, before an AV is used on the public highway.”

The challenge has been to ensure that virtual testing is truly representative, so that the AV will respond in the same way on the road as it did in simulation. Just as a driving simulator must immerse the driver in a convincing virtual reality, the sensor models used to test an AV must accurately reproduce the signals produced by real sensors in real situations.

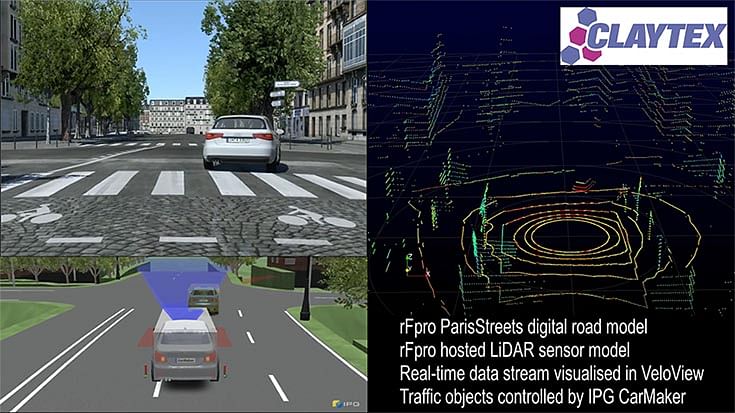

“We are initially developing a suite of generic, ideal sensor models for radar, LiDAR and ultrasound sensors, using software from rFpro, with a more extensive library to follow,” says Dempsey. “rFpro has developed solutions for a number of technical limitations that have constrained sensor modelling until now, including new approaches to rendering, beam divergence, sensor motion and camera lens distortion.”

rFpro software renders images using physical modelling techniques based on the laws of physics, rather than the computationally efficient special effects developed for the gaming and film industries. This means that rFpro images don't just convince human viewers; they are also suitable for machine vision systems, which must be fed sensor data that correlates closely with the real world.

Physical modelling also obeys the principle of conservation of energy. When a LiDAR sensor model projects a pulse of energy into the scene, the return signals will never carry more energy than the original pulse. Traditional rendering techniques developed for the gaming industry often violate this constraint, making it impossible to achieve good correlation with real-world sensors.
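To illustrate the conservation-of-energy constraint, here is a minimal sketch of the energy a LiDAR receiver collects from a diffuse target. It assumes a Lambertian surface that intercepts the whole pulse, no atmospheric loss and a shared emitter/receiver aperture; the function name and parameters are hypothetical and are not part of rFpro's API.

```python
import math


def lidar_return_energy(emitted_j: float, reflectance: float, range_m: float,
                        aperture_area_m2: float, incidence_rad: float = 0.0) -> float:
    """Energy (J) collected by a monostatic LiDAR receiver from a diffuse target.

    Hypothetical helper, assuming: the whole pulse lands on a Lambertian
    surface, no atmospheric loss, and emitter and receiver share one aperture.
    """
    if not 0.0 <= reflectance <= 1.0:
        raise ValueError("reflectance must be between 0 and 1")
    # Conservation of energy: the surface can re-emit at most what it received.
    reflected_total = emitted_j * reflectance
    # A Lambertian surface scatters over a hemisphere; the receiver only
    # intercepts a small solid angle, scaled by cos(incidence) for a tilted target.
    captured_fraction = math.cos(incidence_rad) * aperture_area_m2 / (math.pi * range_m ** 2)
    # Guard for very short ranges, where the far-field formula would exceed 1.
    captured_fraction = min(1.0, max(0.0, captured_fraction))
    return reflected_total * captured_fraction


pulse = 1e-6  # 1 microjoule, an illustrative value
for r in (10.0, 50.0, 150.0):
    e = lidar_return_energy(pulse, reflectance=0.8, range_m=r, aperture_area_m2=5e-4)
    assert e <= pulse  # a return can never exceed the emitted pulse
    print(f"{r:6.1f} m -> {e:.3e} J")
```

The reflectance cap and the clamp on the captured fraction are what keep the model physically honest: however the scene is configured, the returned energy stays at or below the emitted energy.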

rFpro also solves the beam divergence problem that afflicts almost all ray tracing systems. These systems work by sampling thousands of individual rays of light as they travel through the scene, which forces a trade-off between performance and quality. rFpro instead samples each point as a truncated cone that matches the shape of the LiDAR pulse, so that everything the beam strikes is sampled, not just the individual rays selected.
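rFpro's internal method is not described here, but the geometry behind cone sampling can be sketched. The example below assumes a circular beam footprint that grows linearly with range (a truncated cone) and a thin vertical object, such as a pole, taller than the footprint. It compares a single centre ray with the fraction of the cone's cross-section the object covers. All names and numbers are hypothetical.

```python
import math


def footprint_radius(range_m: float, exit_radius_m: float, divergence_rad: float) -> float:
    """Beam radius at a given range; divergence is the full cone angle."""
    return exit_radius_m + range_m * math.tan(divergence_rad / 2.0)


def _disc_area_left_of(x: float, r: float) -> float:
    """Area of a disc of radius r (centred at 0) lying at horizontal position <= x."""
    x = max(-r, min(r, x))
    return x * math.sqrt(r * r - x * x) + r * r * math.asin(x / r) + r * r * math.pi / 2.0


def strip_coverage(range_m, exit_radius_m, divergence_rad, centre_offset_m, width_m):
    """Fraction of the beam footprint covered by a vertical strip (e.g. a pole)."""
    r = footprint_radius(range_m, exit_radius_m, divergence_rad)
    left = centre_offset_m - width_m / 2.0
    right = centre_offset_m + width_m / 2.0
    covered = _disc_area_left_of(right, r) - _disc_area_left_of(left, r)
    return covered / (math.pi * r * r)


def single_ray_hits(centre_offset_m: float, width_m: float) -> bool:
    """A single ray along the beam axis only hits if the strip straddles the axis."""
    return abs(centre_offset_m) <= width_m / 2.0


# Illustrative values: 5 mm exit radius, 3 mrad divergence, a 5 cm pole 8 cm off-axis at 80 m.
r = footprint_radius(80.0, 0.005, 0.003)
print(f"footprint radius at 80 m: {r:.3f} m")
print("single ray hits pole:", single_ray_hits(0.08, 0.05))
print(f"footprint covered by pole: {strip_coverage(80.0, 0.005, 0.003, 0.08, 0.05):.1%}")
```

In this set-up the single axial ray misses the pole entirely, while the cone footprint still partly overlaps it, which is the kind of partial return a real divergent LiDAR beam would produce.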

As an autonomous vehicle travels along the road, every bump, road repair and undulation has an effect on what the vehicle's LiDAR sensor detects. Because rFpro uses very high-quality surface definition, to around 1 mm in height and position, the effect of the sensors moving through any particular scenario is easily captured and accounted for.
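A quick worked example shows why such small surface details matter. This sketch assumes a rigid vehicle with no suspension or tyre compliance, where only the front axle rides over a bump, and an illustrative 2.7 m wheelbase. It estimates how far the point where a LiDAR beam lands shifts at range. The function names are hypothetical.

```python
import math


def pitch_from_bump(bump_height_m: float, wheelbase_m: float) -> float:
    """Body pitch (rad) when only the front axle rides over a bump.

    Simplifying assumption: rigid body, no suspension or tyre compliance.
    """
    return math.atan2(bump_height_m, wheelbase_m)


def beam_height_shift(range_m: float, pitch_rad: float) -> float:
    """Vertical shift (m) of where a body-mounted LiDAR beam lands at a given range."""
    return range_m * math.tan(pitch_rad)


wheelbase = 2.7  # metres, an illustrative value
for bump in (0.001, 0.01):
    pitch = pitch_from_bump(bump, wheelbase)
    shift = beam_height_shift(100.0, pitch)
    print(f"{bump * 1000:.0f} mm bump -> {math.degrees(pitch):.3f} deg pitch, "
          f"{shift:.3f} m shift at 100 m")
```

Under these assumptions a 1 cm bump moves the beam by roughly 0.37 m at 100 m, enough to change whether a distant low obstacle is hit at all.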

Claytex has found camera modelling in rFpro to be very powerful because the software supports different lens calibration models, so each customer can be supported without having to change their preferred calibration methods. This includes calibration for lens distortion and chromatic aberration, and support for wide-angle cameras with up to a 360-degree field of view.
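As an example of what a lens calibration model looks like, here is a sketch of the widely used Brown-Conrady radial and tangential distortion model, in the same form that OpenCV uses with coefficients k1, k2, k3, p1 and p2. The article does not say which models rFpro supports, so this is one common choice rather than rFpro's implementation; the function names and intrinsic values are illustrative.

```python
def distort_normalized(x, y, k1=0.0, k2=0.0, k3=0.0, p1=0.0, p2=0.0):
    """Apply Brown-Conrady radial (k1..k3) and tangential (p1, p2) distortion
    to normalised image coordinates (x = X/Z, y = Y/Z)."""
    r2 = x * x + y * y
    radial = 1.0 + k1 * r2 + k2 * r2 ** 2 + k3 * r2 ** 3
    x_d = x * radial + 2.0 * p1 * x * y + p2 * (r2 + 2.0 * x * x)
    y_d = y * radial + p1 * (r2 + 2.0 * y * y) + 2.0 * p2 * x * y
    return x_d, y_d


def project_point(point_cam, fx, fy, cx, cy, **dist):
    """Project a 3-D point in camera coordinates (Z forward) to pixel coordinates."""
    X, Y, Z = point_cam
    if Z <= 0:
        raise ValueError("point must be in front of the camera")
    x_d, y_d = distort_normalized(X / Z, Y / Z, **dist)
    return fx * x_d + cx, fy * y_d + cy


# Illustrative intrinsics for a 1920x1080 camera; k1 < 0 gives barrel distortion.
pt = (2.0, 0.5, 4.0)
print(project_point(pt, 1000.0, 1000.0, 960.0, 540.0))
print(project_point(pt, 1000.0, 1000.0, 960.0, 540.0, k1=-0.2, k2=0.05))
```

This polynomial model suits conventional lenses; wide-angle and 360-degree cameras usually need a fisheye or omnidirectional model instead, and chromatic aberration can be approximated by using slightly different coefficients for each colour channel.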

Even the weather conditions can be varied to reflect regional or seasonal changes. rFpro can adjust the lighting of the simulation to match the angle and brightness of the sun at different times of day or different latitudes, reproduce reflections from a wet road surface, and simulate all weather types, including snow and heavy rain. Experiments can therefore be repeated under different settings to ensure that sensor models are exercised across a wide variety of conditions.
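The dependence of sun angle on time of day, season and latitude can be illustrated with the standard textbook approximation for solar elevation. This sketch is not rFpro's method; it assumes local solar time (no longitude or equation-of-time correction), a simple cosine fit for solar declination, and no atmospheric refraction. The function name is hypothetical.

```python
import math


def solar_elevation_deg(latitude_deg: float, day_of_year: int, solar_hour: float) -> float:
    """Approximate solar elevation angle in degrees.

    Simplifications: local solar time (no longitude or equation-of-time
    correction), a cosine fit for declination, and no atmospheric refraction.
    """
    # Solar declination: about +23.44 deg at the June solstice, -23.44 deg in December.
    decl = -23.44 * math.cos(math.radians(360.0 / 365.0 * (day_of_year + 10)))
    # Hour angle: 15 degrees per hour away from solar noon.
    hour_angle = 15.0 * (solar_hour - 12.0)
    lat, d, h = (math.radians(v) for v in (latitude_deg, decl, hour_angle))
    sin_elev = math.sin(lat) * math.sin(d) + math.cos(lat) * math.cos(d) * math.cos(h)
    # Clamp to guard against tiny floating-point overshoot outside [-1, 1].
    return math.degrees(math.asin(max(-1.0, min(1.0, sin_elev))))


# Noon sun at two illustrative latitudes, in late June (day 172) and late December (day 355).
for lat, day in ((52.0, 172), (52.0, 355), (33.0, 172)):
    print(f"lat {lat:4.1f}, day {day:3d}: {solar_elevation_deg(lat, day, 12.0):5.1f} deg at noon")
```

A simulator would feed an angle like this, together with an azimuth, into its lighting model. Sweeping latitude, date and hour is one simple way to generate the repeatable range of lighting conditions described above.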

“We have been really impressed with the results Claytex have achieved using our products,” says Chris Hoyle, Technical Director, rFpro. “We have also been pleased to have such a knowledgeable partner pushing us hard for new features, as that helps steer our future R&D in a direction that will benefit all our customers.”

By Autocar Professional Bureau

08 Oct 2018

Ajit Dalvi
